Algebraic Machine Learning is a new Artificial Intelligence paradigm that learns from data and adapts to the world as Neural Networks do, while offering the explainability and controllability of Symbolic AI.

Algebraic Machine Learning (AML) combines user-defined symbols with self-generated symbols. This lets AML learn from data and adapt to the world as neural networks do, while keeping the explainability of Symbolic AI.

AML is a purely symbolic approach and neither uses neurons nor is a neuro-symbolic method. Algebraic Machine Learning does not use parameters and it does not rely on fitting, regression, backtracking, constraint satisfiability, logical rules, production rules or error minimization.

We build more robust and transparent learning systems, minimizing well-known limitations of existing approaches such as statistical biases, catastrophic forgetting and shallow learning.

Instead of "fixing" any of the existing approaches, we have developed a new AI formalism inspired by Model Theory, a mathematical discipline that combines Abstract Algebra with Mathematical Logic. We felt that a new formalism was needed to ensure transparency, control and safety from first principles. These characteristics allow for better cooperation between humans and machines, with the constraints and safeguards one may want on their interaction. We believe this formalism can be a basis for a more “humane” AI.

We are a startup based in Madrid working in close collaboration with the Champalimaud Research Foundation in Lisbon. We are part of a European consortium of companies and research institutions investigating AML, including the leading artificial intelligence centers DFKI and INRIA.

Our team has more than two decades of experience building successful machine-learning-based products, such as the DeNovoX peptide de novo sequencing software and the idTracker animal tracking software.

At Algebraic AI we have developed an open AML engine for building models and performing inference. It has been designed with a focus on community-driven exploration and on facilitating research in AML.

You can download the AML engine now from our GitHub repository.

Martin-Maroto, F., Abderrahaman-Elena, N., Mendez, D., & de Polavieja, G. (2025). Semantic Embedding in Semilattices. arXiv:2502.19944.

Statistics and Optimization are foundational to modern Machine Learning. Here, we propose an alternative foundation based on Abstract Algebra, with mathematics that facilitates the analysis of learning. In this approach, the goal of the task and the data are encoded as axioms of an algebra, and a model is obtained where only these axioms and their logical consequences hold. Although this is not a generalizing model, we show that selecting specific subsets of its breakdown into algebraic atoms obtained via subdirect decomposition gives a model that generalizes. We validate this new learning principle on standard datasets such as MNIST, FashionMNIST, CIFAR-10, and medical images, achieving performance comparable to optimized multilayer perceptrons. Beyond data-driven tasks, the new learning principle extends to formal problems, such as finding Hamiltonian cycles from their specifications and without relying on search. This algebraic foundation offers a fresh perspective on machine intelligence, featuring direct learning from training data without the need for a validation dataset, scaling through model additivity, and asymptotic convergence to the underlying rule in the data.
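As a rough illustration of what "encoding data as axioms" can look like, the sketch below treats a model as a set of atoms and checks which order relations hold in it. The representation used here (terms and atoms as sets of constant names, the `term`, `atoms_below` and `holds` helpers, and the toy constants) is an assumption made for illustration, loosely following the atomized-semilattice papers listed on this page. It is not the API of the AML engine.

```python
from typing import FrozenSet, Set

Term = FrozenSet[str]  # a term: an idempotent, commutative sum of constants
Atom = FrozenSet[str]  # an atom: identified here by the constants it lies below


def term(*constants: str) -> Term:
    """Build a term from constant names (hypothetical helper)."""
    return frozenset(constants)


def atoms_below(t: Term, model: Set[Atom]) -> Set[Atom]:
    """Atoms of the model that lie below t, i.e. share a constant with it."""
    return {phi for phi in model if phi & t}


def holds(a: Term, b: Term, model: Set[Atom]) -> bool:
    """a <= b holds when every atom below a is also below b."""
    return atoms_below(a, model) <= atoms_below(b, model)


# Toy model over the hypothetical constants "stripe", "zebra" and "horse".
model: Set[Atom] = {
    frozenset({"stripe", "zebra"}),  # an atom below both "stripe" and "zebra"
    frozenset({"zebra"}),
    frozenset({"horse"}),
}

print(holds(term("stripe"), term("zebra"), model))          # True
print(holds(term("stripe"), term("horse"), model))          # False
print(holds(term("zebra"), term("zebra", "horse"), model))  # True
```

In this toy setting an axiom is just a pair of terms, and checking it reduces to a subset test between sets of atoms. No parameters are fitted at any point.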

Martin-Maroto, F., Ricciardo, A., Mendez, D., & de Polavieja, G. (2023). Semantic Embedding in Semilattices. arXiv:2311.01389.

We extend the theory of atomized semilattices to the infinite setting. We show that it is well-defined and that every semilattice is atomizable. We also study atom redundancy, focusing on complete and finitely generated semilattices and show that for finitely generated semilattices, atomizations consisting exclusively of non-redundant atoms always exist.

Martin-Maroto, F., & de Polavieja, G. (2022). Semantic Embedding in Semilattices. arXiv:2205.12618.

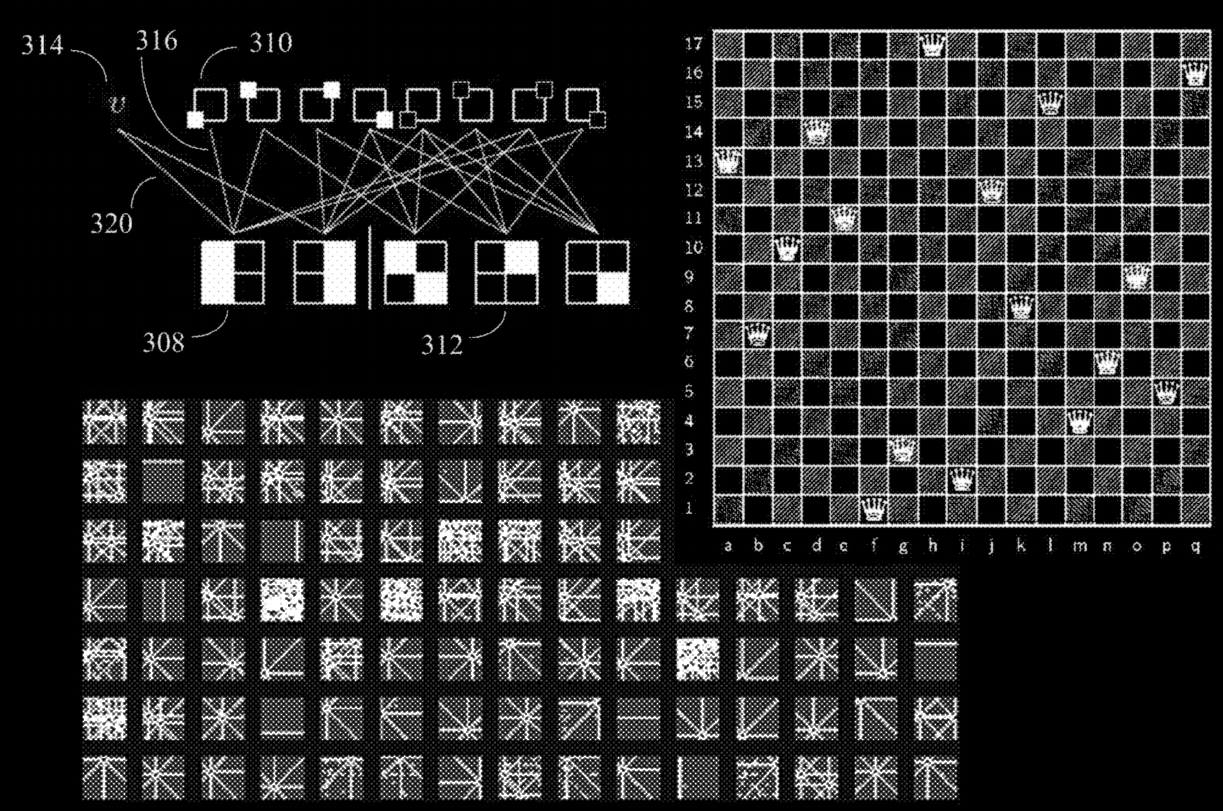

Here we give a formal definition of a semantic embedding in a semilattice which can be used to resolve machine learning and classic computer science problems. Specifically, a semantic embedding of a problem is here an encoding of the problem as sentences in an algebraic theory that extends the theory of semilattices. We use the recently introduced formalism of finite atomized semilattices to study the properties of the embeddings and their finite models. We give examples of semantic embeddings that can be used to find solutions for the N-Queen's completion, the Sudoku, and the Hamiltonian Path problems.

Martin-Maroto, F., & de Polavieja, G. (2021). Finite Atomized Semilattices. arXiv:2102.08050.

In this work we present a formal mathematical description of finite atomized semilattices, an algebraic construction we used to define and embed models in Algebraic Machine Learning (AML). Among others, concepts such as the full crossing operator or pinning terms, that play an important role in AML, are formalised.
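To give a feel for the full crossing operator mentioned above, here is a minimal sketch of one simplified reading of it: to enforce `a <= b`, every atom that lies below `a` but not below `b` is replaced by its unions with the atoms below `b`. The set-based representation and the function names are assumptions for illustration. The sketch leaves out everything else the papers formalise (redundancy removal, pinning terms, negative axioms) and should not be taken as the engine's implementation.

```python
from typing import FrozenSet, Set

Term = FrozenSet[str]  # a term: a set of constant names
Atom = FrozenSet[str]  # an atom: the set of constants it lies below


def atoms_below(t: Term, model: Set[Atom]) -> Set[Atom]:
    """Atoms of the model that share at least one constant with t."""
    return {phi for phi in model if phi & t}


def holds(a: Term, b: Term, model: Set[Atom]) -> bool:
    """a <= b holds when every atom below a is also below b."""
    return atoms_below(a, model) <= atoms_below(b, model)


def full_crossing(a: Term, b: Term, model: Set[Atom]) -> Set[Atom]:
    """Return a new atom set in which a <= b holds (simplified sketch)."""
    below_b = atoms_below(b, model)
    # Atoms that witness the failure of a <= b.
    discriminant = atoms_below(a, model) - below_b
    # Each offending atom is crossed with every atom below b.
    crossed = {phi | psi for phi in discriminant for psi in below_b}
    return (model - discriminant) | crossed


# Start from one atom per constant, so no non-trivial relation holds yet.
a, b, c = frozenset({"a"}), frozenset({"b"}), frozenset({"c"})
model: Set[Atom] = {a, b, c}

new_model = full_crossing(a, b, model)
print(holds(a, b, model))      # False
print(holds(a, b, new_model))  # True
print(holds(b, a, new_model))  # False
```

Because each new atom contains a constant of `b`, it lies below `b` by construction, which is why the relation holds afterwards without any search or error minimization.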

Martin-Maroto, F., & de Polavieja, G. (2018). Algebraic Machine Learning. arXiv:1803.05252.

This is the foundation of Algebraic Machine Learning (AML) and where the main concepts of the methodology are introduced. As an alternative to statistical learning, AML offers advantages in combining bottom-up (data) and top-down (pre-existing knowledge) information, and large-scale parallelization.

In AML, learning and generalization are parameter-free, fully discrete and without function minimization. We introduce this method using a simple problem that is solved step by step. In addition, two more problems, hand-written character recognition (MNIST) and the Queens Completion problem, are explored as examples of supervised and unsupervised learning, respectively.

The method consists of independently calculating discrete algebraic models of the input data in one or many computing devices, and asynchronously sharing components of the algebraic models among the computing devices, without constraints on when or how many times the sharing needs to happen. Each computing device improves its algebraic model every time it receives new input data or components shared by other computing devices, thereby providing a solution to the scaling-up problem of machine learning systems.
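One way to picture why such sharing can be unconstrained is sketched below. It assumes, purely for illustration, that the shared "components" are atoms represented as sets of constant names and that a device combines what it receives by set union. The text above does not specify the sharing operation, so `merge` and the worker models are hypothetical.

```python
from typing import FrozenSet, Set

Term = FrozenSet[str]  # a term: a set of constant names
Atom = FrozenSet[str]  # an atom: the set of constants it lies below


def atoms_below(t: Term, model: Set[Atom]) -> Set[Atom]:
    """Atoms of the model that share at least one constant with t."""
    return {phi for phi in model if phi & t}


def holds(a: Term, b: Term, model: Set[Atom]) -> bool:
    """a <= b holds when every atom below a is also below b."""
    return atoms_below(a, model) <= atoms_below(b, model)


def merge(local: Set[Atom], received: Set[Atom]) -> Set[Atom]:
    """Combine a local model with atoms received from another device."""
    return local | received


a, b = frozenset({"a"}), frozenset({"b"})

# Two devices that each learned a different model of the same axiom a <= b.
worker_1: Set[Atom] = {frozenset({"a", "b"}), frozenset({"b"}), frozenset({"c"})}
worker_2: Set[Atom] = {frozenset({"a", "b", "c"}), frozenset({"b"}), frozenset({"c"})}

merged = merge(worker_1, worker_2)

print(holds(a, b, merged))                             # True
print(merge(worker_1, worker_2) == merge(worker_2, worker_1))  # True
print(merge(merged, worker_2) == merged)               # True
```

Set union is commutative and idempotent, so in this sketch the order of arrival and repeated deliveries do not change the result. Whether an atom lies below a term depends only on the atom itself, so a relation satisfied by both models is still satisfied after merging.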